AI techniques have tremendous potential. They are advancing quickly and gradually spreading into all professions and aspects of our society. AI also raises important questions about safety and about social and environmental responsibility.

AI can facilitate malicious acts such as spreading fake news or cyberattacks. New safety questions have arisen around how we can be sure that AI will do what we expect it to. There are other issues regarding the use of data, particularly personal or biased data. And there are also considerations about AI’s environmental impact.

We will explore these issues in more detail in this chapter and the next. In each chapter, we will suggest some things to consider to ensure that you remain vigilant and behave responsibly. At the end of these two chapters, we’ll see how AI governance can encourage or even compel businesses to develop safe, responsible, and trustworthy AI systems. Are you ready?

Explore Malicious AI Usage

AI is a tool that provides a service to humans. As we’ve seen, we can use it to make great strides in many sectors, such as healthcare, education, and the environment. It can, however, also facilitate the work of people with malicious intentions. We’re going to explore two examples of malicious use of AI that you’re almost certain to come across: fake news and cyberattacks.

Fake News

You may have encountered weird or improbable videos circulating online: Barack Obama insulting Donald Trump or Mark Zuckerberg talking about manipulating Facebook users. These fake videos began appearing in 2018 and can seem startlingly real. The underlying technology leverages powerful artificial intelligence techniques. People refer to these synthetic media as deep fakes.

But didn’t fake videos exist well before artificial intelligence?

Yes, but in the past, only a handful of experts manipulated photos. Now a growing number of people can do it, and the results are more and more convincing. Visit the site This person does not exist to see portraits created using this artificial intelligence technique. It would be easy to mistake these artificially generated people for real humans.

However, AI fakery doesn't stop at amusing images. AI creates fake texts, videos, and so on. These new techniques bring risks, including widespread disinformation.

What can we do about it?

There are two tools you can use: common sense and critical thinking. To protect against deep fakes, arm yourself with these, along with a few pieces of advice:

Question your sources and make sure your information is legitimate.

Cross-check your information by checking other news websites.

You can also use fact-checking websites like FactCheck.org from The Annenberg Public Policy Center or Fact Checker from The Washington Post.

Cyberattacks

With the growing capabilities of AI and its new-found accessibility, cybercriminals are increasingly using AI to carry out cyberattacks. They use it to detect vulnerabilities in your devices or to automate phishing attacks that trick victims into revealing personal data, passwords, or bank account details. Let’s look at some examples of possible, and indeed successful, cyberattacks.

Phishing Attacks

Attackers can use AI to identify potential victims who are likely to click on fake links. They can also use it to personalize phishing emails with data captured from previous data leaks or from social media platforms such as LinkedIn, Facebook, and Twitter.

Identity Theft

Cyberattacks can use the same faking techniques described above. A technology known as "deep voice" uses AI to impersonate a person's voice based on audio samples of their voice. Attackers can obtain these samples from recordings of online meetings or public speeches (readily available for journalists, politicians, or corporate executives).

In 2020, a manager at a Hong Kong bank received a call from his boss with very positive news: the company was planning to make a major acquisition, and to do this, the manager needed his bank's approval to make several transfers totaling $35 million. The employee recognized his boss's voice, thought everything was genuine, and sent the money. However, the voice had been generated by artificial intelligence.

An End-to-End Cyberattack Using AI

The various forms of cyberattack discussed above are only a tiny fraction of the possibilities. Each is a threat in its own right, but they can also be combined to create attacks in which AI performs all the necessary actions from start to finish, whether it's target reconnaissance, system intrusion, command execution, privilege escalation, or covert data exfiltration.

Explore the Challenges Associated With AI Safety

Artificial intelligence models based on machine learning are powerful but raise important safety issues: they don't always behave as intended, and sometimes they behave in dangerous ways. This safety issue is crucial in AI because these systems are becoming increasingly autonomous and are still largely unpredictable, unlike more conventional products such as cars. Companies and governments have so far invested very little in AI safety, even though it could be one of the key challenges of the 21st century. AI safety covers three major issues: robustness, explainability, and definition of purpose. Let’s dive a little deeper into these concepts.

Issue No. 1: Lack of AI Robustness

The robustness of an AI system indicates how reliable its behavior is in unknown situations—i.e., in cases it hasn’t encountered during training. But machine learning systems are based on statistical correlations rather than understanding reality. As a result, when reality changes and the correlations are no longer valid, the AI system may react inappropriately.

Practical example: Suppose someone trains an AI system to recognize dog breeds in images. It has been fed a training dataset consisting of images of different breeds of dogs. The system performs well and can correctly recognize and classify the different dog breeds in the images it is given.

However, if the system is suddenly shown an image of an unknown animal that looks like a dog but is not a breed listed in its training dataset, it may struggle to classify it correctly. It doesn't fully understand the underlying reality of this unknown animal. The AI system may make an incorrect decision or give an unpredictable response.
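The situation above can be sketched with a toy classifier. This is only an illustration under stated assumptions: the breed names, the two-number "features", and the `classify` function are all invented for the example, and a real image model is far more complex. The point it shows is that a classifier of this kind can only answer with a label it was trained on, and it may do so with high apparent confidence even for an input that is nothing like its training data.

```python
import math

# Hypothetical 2-number "feature" summaries for three dog breeds (invented values).
CENTROIDS = {
    "labrador": (1.0, 1.0),
    "poodle": (4.0, 1.0),
    "husky": (2.5, 4.0),
}

def classify(features):
    """Return (label, confidence) from a softmax over negative distances."""
    scores = {label: -math.dist(features, c) for label, c in CENTROIDS.items()}
    total = sum(math.exp(s) for s in scores.values())
    probs = {label: math.exp(s) / total for label, s in scores.items()}
    best = max(probs, key=probs.get)
    return best, probs[best]

# An input close to the training data: the answer is sensible.
known_label, known_conf = classify((1.1, 0.9))

# An input far from every breed (say, a different animal): the model still
# has to pick one of the three breeds, because those are its only options.
unknown_label, unknown_conf = classify((20.0, -15.0))

print(known_label, round(known_conf, 2))
print(unknown_label, round(unknown_conf, 2))
```

The second result is still one of the three breeds, reported with a confidence that gives no hint that the input was unfamiliar.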

A lack of robustness can be dangerous. This is because some cyberattacks are designed to exploit this vulnerability by fooling the AI model with tiny adjustments to its input data. For example, an attacker could use stickers or paint on road signs to target the AI in autonomous vehicles and affect how it interprets the signs. This could result in the AI system misinterpreting a stop sign as a yield sign and putting passengers at risk.

The two images of the STOP sign look the same to us, but a few changes invisible to the naked eye are enough to change how the AI model interprets them.
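To see why tiny input changes can flip a decision, here is a minimal sketch on a toy linear model. Everything in it is an assumption made for illustration: the 100 "pixels", the weights, the `predict` and `perturb` helpers, and the two labels. It is not how a real vehicle's vision system works. Each pixel is nudged by a small amount in the direction that lowers the model's score, and many small nudges add up.

```python
def predict(weights, bias, pixels):
    """Toy linear classifier: positive score means 'stop', otherwise 'yield'."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return ("stop" if score > 0 else "yield"), score

def perturb(weights, pixels, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the score."""
    adversarial = []
    for w, p in zip(weights, pixels):
        step = -epsilon if w > 0 else epsilon
        # Keep pixel values inside the valid [0, 1] range.
        adversarial.append(min(1.0, max(0.0, p + step)))
    return adversarial

# Invented weights and image: 100 pixels with values between 0 and 1.
WEIGHTS = [0.5 if i % 2 == 0 else -0.5 for i in range(100)]
BIAS = 0.0
clean = [0.6 if i % 2 == 0 else 0.5 for i in range(100)]

adversarial = perturb(WEIGHTS, clean, epsilon=0.06)

clean_label, clean_score = predict(WEIGHTS, BIAS, clean)
adv_label, adv_score = predict(WEIGHTS, BIAS, adversarial)
largest_change = max(abs(a - c) for a, c in zip(adversarial, clean))

print(clean_label, adv_label, largest_change)
```

No single pixel moves by more than 6% of its range, yet the predicted label changes. Attacks on real models rely on the same idea, applied to far larger models and inputs.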

Issue No. 2: AI's Lack of Explainability

The explainability and transparency of an AI system enable a human to understand and analyze how it works to ensure that it functions in the desired way. Today, however, most AI systems that use machine learning are "black boxes" that operate autonomously without anyone knowing how or why. Machine learning AI systems use data and statistical reasoning methods to learn correlations. However, these correlations do not necessarily indicate a causal link.

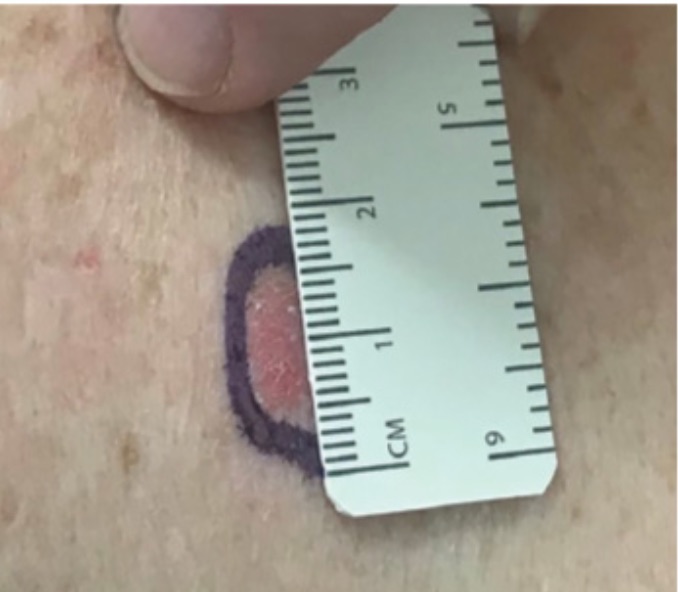

In the case of the automated skin lesion classification algorithm, greater explainability and transparency enable humans to understand how the AI model works and detect when it's not working properly.
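The gap between correlation and causation can be illustrated with a deliberately simple sketch. The data, the feature names (`irregular_border`, `ruler_in_photo`), and the one-feature "model" are all hypothetical and invented for this example. They are not taken from any real skin lesion system. The sketch shows how a model can latch onto a feature that happens to match the labels in its training data, and how inspecting what the model relies on reveals the problem.

```python
# Each record: (irregular_border, ruler_in_photo, malignant). All values are invented.
TRAIN = [
    (1, 1, 1), (1, 1, 1), (0, 1, 1), (1, 1, 1),
    (0, 0, 0), (0, 0, 0), (1, 0, 0), (0, 0, 0),
]
# New photos where the presence of a ruler no longer tracks the diagnosis.
DEPLOY = [
    (1, 0, 1), (1, 0, 1), (1, 1, 1), (0, 0, 1),
    (0, 1, 0), (0, 1, 0), (0, 0, 0), (1, 1, 0),
]
FEATURES = {"irregular_border": 0, "ruler_in_photo": 1}

def accuracy(records, index):
    """Share of records where the feature value equals the label."""
    hits = sum(1 for r in records if r[index] == r[2])
    return hits / len(records)

def fit_stump(records):
    """Pick the single feature that best matches the label on the given data."""
    return max(FEATURES, key=lambda name: accuracy(records, FEATURES[name]))

chosen = fit_stump(TRAIN)
train_acc = accuracy(TRAIN, FEATURES[chosen])
deploy_acc = accuracy(DEPLOY, FEATURES[chosen])

print(chosen, train_acc, deploy_acc)
```

Because this toy model is transparent, a human can see that it relies on `ruler_in_photo`, which has no medical meaning, and can predict that it will fail on new photos. With a black-box model, the same flaw could go unnoticed until it causes errors.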

Issue No. 3: Defining the Right Objectives for an AI System

When a model interacts with people, it has to carry out actions or make decisions that impact the world. Therefore, we must define its objectives properly, or actions and decisions will not meet our expectations.

In practice, however, it is challenging to translate the complexity and nuance of human objectives into computer language. It is easy for a machine to misunderstand the intent behind human instructions by applying them too literally.

For example, when designers instructed an AI not to lose a game of Tetris, the model found the best way of complying with the instruction without meeting the designers' expectations: it paused the game as soon as it predicted it would lose. In a less trivial example, many online video platforms try to suggest videos "the user wants to see" by giving the AI the objective of offering videos that the user will watch from start to finish. However, defining the AI's aim in this way causes the algorithm to favor videos that are short or sensational, or that reinforce the user's strong opinions. The user is more likely to watch them from start to finish, but they are not necessarily the videos the user most wants to see. How do you explain to a machine what you mean by "videos the user wants to see"? It's not that simple.
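The video example can be made concrete with a small sketch. The catalog, the numbers, and the `satisfaction` field are hypothetical. In particular, a real platform has no direct measurement of what the user "wants most", which is exactly the difficulty. The sketch only shows that optimizing a measurable stand-in (completion rate) can select a different video than the objective the designers had in mind.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Video:
    title: str
    minutes: float
    completion_rate: float  # share of viewers who watch to the end (what the AI is told to maximize)
    satisfaction: float     # invented score in [0, 1] standing in for what the user really wants

# Invented catalog for illustration only.
CATALOG = [
    Video("30-second outrage clip", 0.5, 0.95, 0.30),
    Video("10-minute tutorial", 10.0, 0.60, 0.80),
    Video("45-minute documentary", 45.0, 0.25, 0.90),
]

def recommend(catalog, objective):
    """Return the video that scores highest on the given objective."""
    return max(catalog, key=objective)

by_stated_objective = recommend(CATALOG, lambda v: v.completion_rate)
by_intended_objective = recommend(CATALOG, lambda v: v.satisfaction)

print(by_stated_objective.title)
print(by_intended_objective.title)
```

The system follows its instruction perfectly and still recommends the short clip rather than the video the user would value most. The flaw lies in the objective it was given, not in how well it optimizes.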

Let’s Recap!

AI can be used against you for malicious purposes. Use your critical thinking skills to protect yourself from fake news and cyberattacks. If something seems suspicious, take a little time to check and cross-reference your sources.

AI raises many safety issues and doesn’t always behave how we want it to. AI safety is a major research topic that is currently undergoing considerable growth. Governance and regulation are also required to limit the risks associated with AI.

In the next chapter, we will look at social and environmental responsibility challenges around the use of artificial intelligence.